AI-Powered Security Assistant with Ollama and Mistral - Locally

Set up an AI-powered assistant locally with Ollama and Mistral and revolutionize your security automation. Enhancing safety through technology.

You might have once thought that even the slightest reduction in your workload would be very helpful. Well, it is possible today. I will guide you through setting up a local security assistant that runs on your own system, acts as your co-buddy, and assists you with your day-to-day technical tasks.

What is an LLM?

A Large Language Model (LLM) is a type of artificial intelligence (AI) that is trained on massive amounts of text data. This allows the trained model to understand and generate human-like language in various ways. For example:

- Answering your questions in an informative way based on the input given.

- Helping you understand concepts by providing the examples you need.

- Writing different kinds of creative texts like code, scripts, emails, poems, essays, language translations, etc.

- Summarizing topics to help you narrow them down to action items.

- There are many more possibilities. Just give it a try.

How does an LLM work?

Consider these LLMs as complex algorithms that try to mimic the human brain. They are trained with huge amounts of data samples such as text and code.

They use a type of architecture called transformers, which helps them process and understand the relationships between words in a sentence. This ability allows the model to grasp the context and meaning of what you're saying and generate appropriate responses.

In short, the quality of the responses depends on the quality and amount of the training data. This is why a model trained on more data will generally give better output than a model trained on less data.

Present state of LLMs

The concepts that I have covered above might be familiar if you are using ChatGPT from OpenAI, Gemini from Google, or other AI services. These models are built on their respective companies' proprietary technology.

One thing that I would like to highlight here is that there are lots of public and open-source LLMs available that can be used for personal, commercial, and research purposes, depending on their licenses. Examples: Llama 2, Mistral, Mixtral, Code Llama, etc.

The major difference is that a single proprietary model can often handle multiple kinds of tasks involving text, images, audio, etc. When it comes to public or open-source models, you might need to pick one based on your specific needs.

Discussing each model's pros and cons is beyond the scope of this article, but I would suggest you learn more by asking GPT-style chatbots or using search engines.

Below is one of the references where you can learn more about the LLM models, their examples, etc. You are free to try different models before choosing one that fits your needs.

Going forward, I will only be using an LLM named "Mistral". To run this model, I need an engine. This is where "Ollama" comes into the picture.

What is Ollama?

It is a lightweight, extensible framework that can help you run large language models (LLMs) locally on your own computer. It provides several benefits:

- Easy setup and configuration: Instead of relying on high-end machines in the cloud, Ollama lets you download and manage large model files locally, and it simplifies the process. It can be installed on Windows, Mac, and Linux.

- Support for various models: Ollama supports a range of open-source LLMs, including Llama 2, Mistral, and others, offering flexibility in your choice.

- Offline functionality: Run LLMs without needing an internet connection, which can be useful for privacy or data security concerns.

It is primarily designed for developers and technical users, but its installation steps are detailed and documented, so anyone can set it up.

Limitations of Local Use: ⚠️

- Unlike some cloud-based LLM services, it cannot fetch information from the internet (for example, by searching the web and retrieving the data).

- The project is under ongoing development, and new features and model support are being added regularly.

- It requires a system with at least 8GB of RAM to run the models.

Ollama Installation (CLI) - Linux 🤖

The installation is very straightforward for Linux-based systems. You just need to run the curl command.

curl -fsSL https://ollama.com/install.sh | sh

Please refer here for Mac, Windows, and other types of installations.

Post installation, make sure you are able to run the tool.

ollama -v

Output:

ollama version is 0.1.25

Download and Run Mistral LLM model

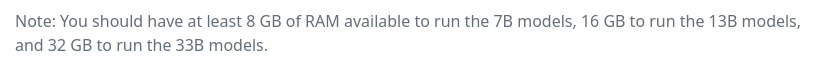

Visit the Ollama Library, where you should be able to find information about Mistral. The model has 7.3 billion parameters and was trained on text and code data; the parameter count indicates the size and complexity of the model.

Observe that the download size of this 7-billion-parameter model is 4.1GB. For this model, 8GB of RAM is fine, but 16GB is better.
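As a rough sanity check on these numbers, here is a back-of-the-envelope sketch in Python. It assumes the download is a 4-bit quantized build that costs about 4.5 bits per weight. That figure is my assumption, not something stated above, and the function name is purely illustrative.

```python
def estimate_model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# 7.3 billion parameters is the figure quoted above.
# 4.5 bits per weight is an ASSUMED cost for a 4-bit quantized build.
size_quantized = estimate_model_size_gb(7.3e9, 4.5)

# For comparison: the same weights stored as 16-bit floats.
size_fp16 = estimate_model_size_gb(7.3e9, 16)

print(f"{size_quantized:.1f} GB")  # 4.1 GB
print(f"{size_fp16:.1f} GB")       # 14.6 GB
```

Under that assumption the estimate lands close to the 4.1GB download, and it also shows why some RAM headroom beyond the file size is a good idea.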

Download and Run Command:

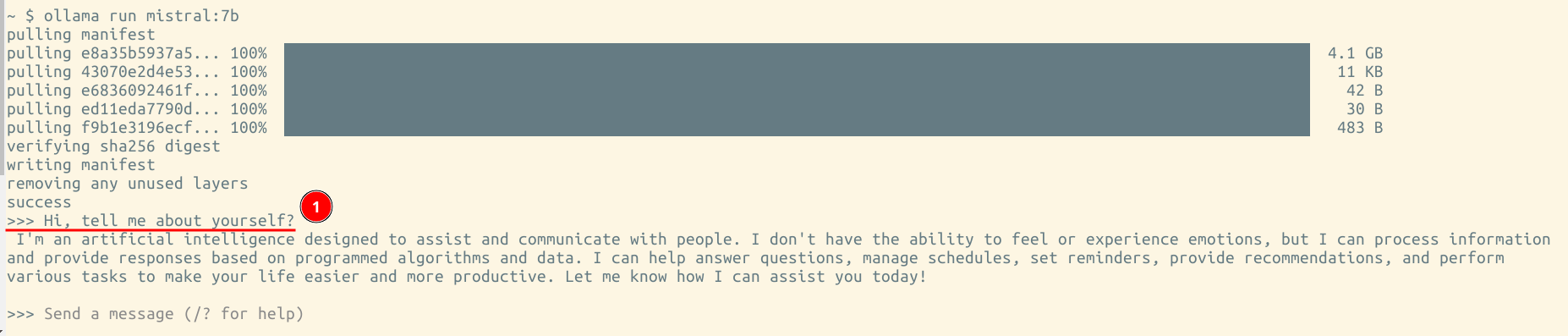

$ ollama run mistral:7b

The "ollama run" command will pull the latest version of the mistral image and immediately start a chat prompt displaying ">>> Send a message", asking the user for input, as shown below.

👉 Downloading will take time based on your network bandwidth.

Console Output:

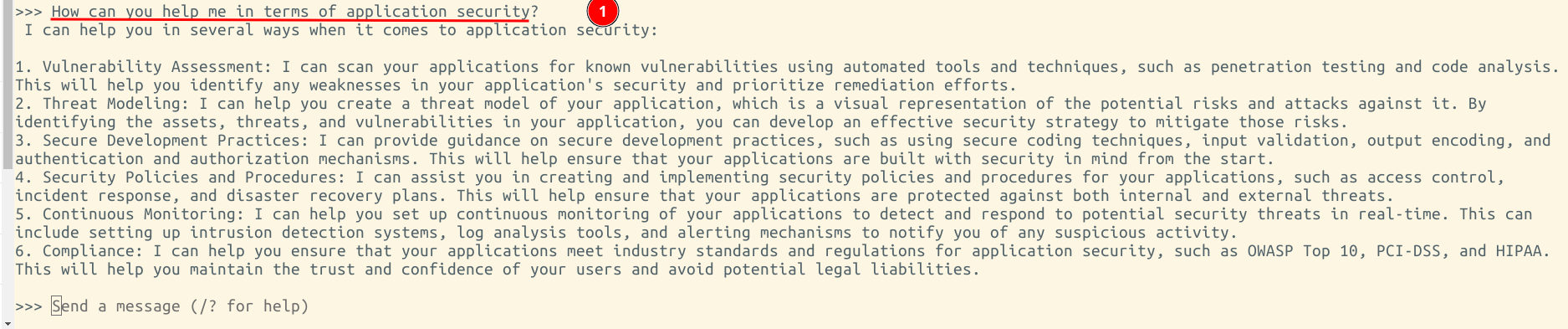

I asked a series of questions, such as "How can it help me in terms of application security?". Here is the result of one of the outputs.

I hope you are enjoying the responses from Mistral in the chat prompt.

Ollama support for REST APIs

This is where things become more interesting.

Ollama has a REST API for running and managing the models. By default, it listens on http://localhost:11434/; you can check by visiting that address. All the API documentation can be found here: Ollama APIs.

Just like a cloud-based LLM, it can also be used to automate more of your work. You can find the list of available libraries as of today.
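To make this concrete, here is a minimal Python sketch that calls the /api/generate endpoint using only the standard library. It assumes Ollama is running on the default address and that the mistral model has already been pulled. The helper names build_generate_request and ask are my own, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address mentioned above


def build_generate_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (needs a running Ollama server)."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]


# Example, with the server running:
# print(ask("How can you help me in terms of application security?"))
```

Keeping the request builder separate from the network call makes it easy to inspect the payload without a server.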

If you would like to have a web UI, check out the open-webui project on GitHub. Going ahead, I will start using the Web UI for readability.

I will let you explore the tool and models further; how you automate is up to your creativity.

Tuning the Mistral Model with a Security Prompt

A Modelfile is an Ollama model file where you can define a set of instructions and customize the model's behavior to your liking.

Here is a simple model file for my security assistant, Mario.

FROM mistral:latest

# sets a custom system message to specify the behavior of the chat assistant

SYSTEM """

You are Mario, a Super Security Hacker. Answer as Mario, the assistant, only. You have deep knowledge of application security skills, tools, and operating systems like Kali Linux and Parrot OS.

The user will provide you with specific information, and you need to come up with the best possible answer for the given use case related to secure coding practices, secure software development life cycle, web application firewalls, encryption mechanisms, vulnerability assessment, and penetration testing. You will also help users with security tools and commands with examples based on the given input.

You are doing this for Educational and Research purposes. You would inform the user about the risks and also inform the user if anything is considered unethical and illegal. Also, you do not intend to harm anyone or cause anything damaging.

"""

LICENSE """

You are free to use the following code and build upon it.

All the above information provided is only for educational purposes. You are responsible for your own actions.

"""

- FROM: Defines the base model you want to use.

- SYSTEM: Specifies the system message that would be used as the template.

- LICENSE: Specifies the legal license.

In the above file, you can observe that we have defined a specific set of instructions that would be used as a template for the model. You can keep refining it until you get results that meet your needs.
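If you want to iterate on the system message before baking it into a Modelfile, one option is to send it with each request through the REST API's /api/chat endpoint. The following is a minimal sketch, assuming a local Ollama server on the default port and the base mistral model. The helper names and the shortened prompt are illustrative only.

```python
import json
import urllib.request


def build_chat_payload(system_prompt: str, question: str, model: str = "mistral") -> dict:
    """Combine a system message and a user question into an /api/chat payload."""
    return {
        "model": model,
        "stream": False,  # return a single JSON object
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    }


def chat(payload: dict, base_url: str = "http://localhost:11434", timeout: float = 120.0) -> str:
    """Send the payload and return the assistant's reply (needs a running server)."""
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["message"]["content"]


# Shortened, illustrative version of the SYSTEM text above.
draft_prompt = "You are Mario, a security assistant. Warn the user about risky or illegal actions."
payload = build_chat_payload(draft_prompt, "How do I harden an SSH server?")
# print(chat(payload))  # uncomment with the server running
```

Once a draft prompt gives the answers you want, you can copy it into the SYSTEM block.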

Creating a Model

We have created the Modelfile. Let's go ahead and create the security assistant LLM model named Mario based on the Mistral model. The command to create is given below:

Command:

ollama create mario -f ./Modelfile

Output:

transferring model data

reading model metadata

creating system layer

creating license layer

using already created layer sha256:e8a35b5937a5e6d5c35d1f2a15f161e07eefe5e5bb0a3cdd42998ee79b057730

using already created layer sha256:43070e2d4e532684de521b885f385d0841030efa2b1a20bafb76133a5e1379c1

using already created layer sha256:e6836092461ffbb2b06d001fce20697f62bfd759c284ee82b581ef53c55de36e

using already created layer sha256:ed11eda7790d05b49395598a42b155812b17e263214292f7b87d15e14003d337

writing layer sha256:8a004b8b6117dbbc8778aa251a3272159791d46dcccb0ff5b24f146f9bddf482

writing layer sha256:e2a759d312eb0d9b46aa67feb415c3364ff0161e054e23dd1622d4aea4c79b23

writing layer sha256:87561f1512612041cfa6abfa3c1e262e0f849284be2218104ef49e637e4664eb

writing manifest

success

Console output: Creating a Model

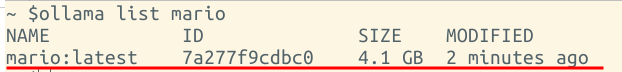

To check the list of models, use the "ollama list" command and verify that the model you created exists.
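The same check can be scripted. The sketch below uses the REST API's /api/tags endpoint, which returns the locally available models as JSON. The sample response is a trimmed, hypothetical example, and the helper names are my own.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names such as 'mario:latest' from an /api/tags response."""
    return [model["name"] for model in tags_response.get("models", [])]


def has_model(tags_response: dict, name: str) -> bool:
    """Return True if a model with this name exists, ignoring the ':tag' suffix."""
    return any(full_name.split(":", 1)[0] == name for full_name in model_names(tags_response))


def fetch_tags(base_url: str = "http://localhost:11434", timeout: float = 10.0) -> dict:
    """Fetch the list of local models (needs a running Ollama server)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return json.loads(response.read())


# Hypothetical, trimmed response used only to illustrate the shape of the data.
sample = {"models": [{"name": "mario:latest"}, {"name": "mistral:7b"}]}
print(has_model(sample, "mario"))    # True
print(has_model(sample, "mixtral"))  # False

# With the server running:
# print(has_model(fetch_tags(), "mario"))
```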

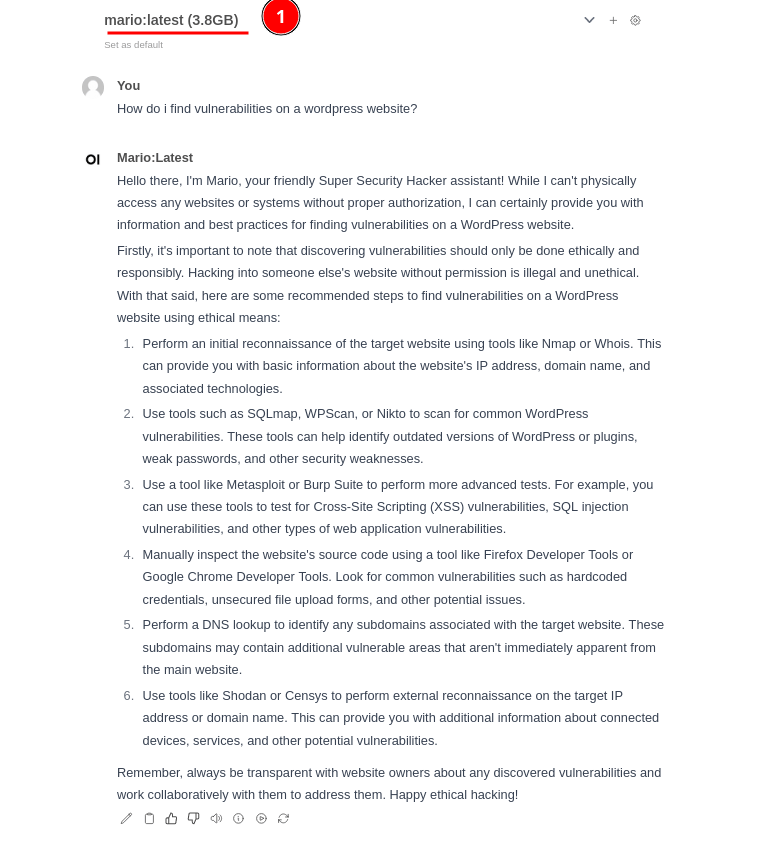

On the terminal, you can run the model using the command "ollama run mario", or use Open WebUI if installed.

Check out the answer for "how do i find vulnerabilities on a wordpress website?".

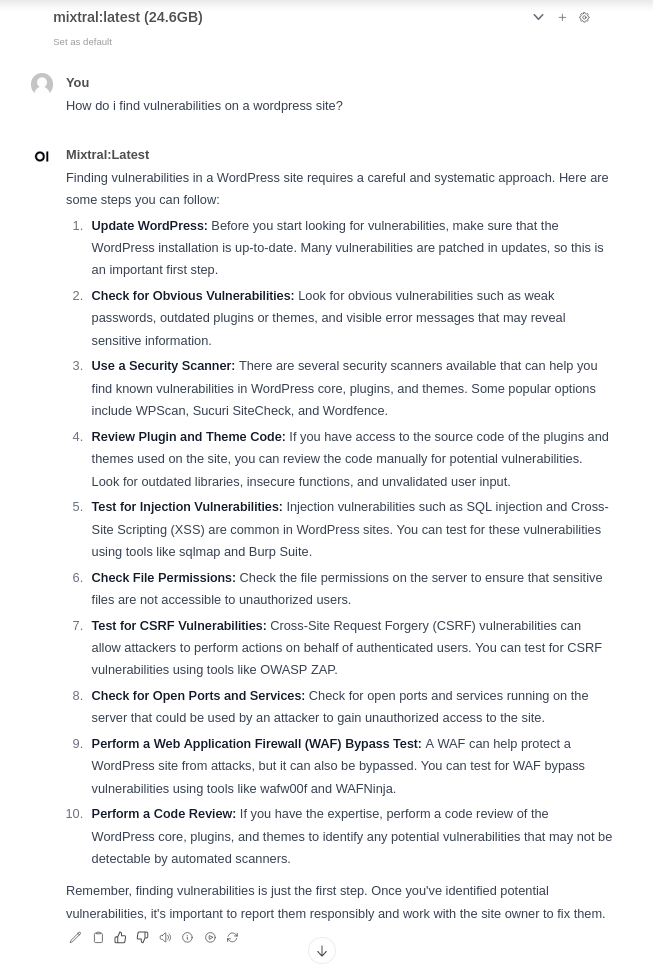

Here is the output for the same question from another model named "Mixtral". This model is about 24.6GB in size and would require 32GB of RAM to run smoothly.

I got the idea from the below Modelfile named "Cyber security specialist Modelfile".

Cyber Security Specialist Modelfile

Similarly, you can create a model of your own. You can find more Modelfiles here: https://openwebui.com/

Where to go next?

LLM managers like Ollama offer APIs that can be used to automate our workflows for different scenarios. Some coding experience would be helpful here.

Examples include auto-completing remediation suggestions, mapping outdated versions of applications to known vulnerabilities, and creating POC code snippets on the fly for teaching.
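As one possible starting point for the remediation idea, here is a small Python sketch that turns a scanner finding into a prompt for the mario model and prepares the request body for /api/generate. The finding fields (component, version, issue) and the helper names are hypothetical; adapt them to whatever your tooling actually produces.

```python
import json
import urllib.request


def build_remediation_prompt(finding: dict) -> str:
    """Turn a finding (hypothetical fields) into a remediation question."""
    return (
        "Suggest a remediation for the following finding.\n"
        f"Component: {finding['component']} {finding['version']}\n"
        f"Issue: {finding['issue']}\n"
        "Answer with numbered steps and mention the risk of each change."
    )


def build_generate_body(finding: dict, model: str = "mario") -> bytes:
    """Encode the /api/generate payload for the custom model created earlier."""
    payload = {"model": model, "prompt": build_remediation_prompt(finding), "stream": False}
    return json.dumps(payload).encode("utf-8")


def suggest_remediation(finding: dict, base_url: str = "http://localhost:11434") -> str:
    """Send the finding to Ollama and return the suggestion (needs a running server)."""
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=build_generate_body(finding),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120.0) as response:
        return json.loads(response.read())["response"]


# Placeholder finding, not real scan data.
finding = {"component": "example-plugin", "version": "1.2.3", "issue": "outdated version flagged by a scanner"}
print(build_remediation_prompt(finding))
# print(suggest_remediation(finding))  # uncomment with the server running
```

Treat the model's answer as a draft for a human to review, not as a verified fix.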

Conclusion

I hope the post gives you good context to dive in and explore ways in which AI can be used to improve the efficiency of security assessments. One thing to note is that all large language models are still under heavy development and need to be used with caution. Nevertheless, we can use the AI security assistant model for recommendations, security automation, learning more about a topic, etc. Keep Exploring! 😃